Istio Use Cases

On this page, you'll learn how to make Istio more approachable, comprehensible, and useful for your projects. To illustrate each use case, we'll be using an open-source Spring Boot application called "fleetman."

The setup for these demos includes GKE version v1.20.10-gke.1600 and Istio version 1.11.2, with Prometheus, Tracing, and Stackdriver enabled.

Some people find Kubernetes to be a challenging technology, so why add another layer of complexity with Istio? Through hands-on demonstrations, you'll discover how Istio can help solve complex problems in real-life Kubernetes projects.

One of the most popular use cases for Istio is traffic management, which includes canary releases. A canary release deploys a new version of a software component (a new image) but routes only a portion of the requests to it, while the old (working) version keeps serving the rest. Plain Kubernetes can approximate a canary, but it offers no robust way to control the traffic split; Istio makes canaries practical.

Plain Kubernetes:

A single Kubernetes Service can have pods running different versions behind it, as long as they all carry the labels in the Service's selector.

In this scenario, a user accesses the staff service through a regular network request, such as HTTP, and Kubernetes routes the request to one of the pods behind the Service. All the pods share the application label "staff-service", but in this example two of them are labeled "safe" and one is labeled "risky". The Service name resolves through DNS to the Service's ClusterIP, and kube-proxy then spreads connections roughly evenly across the matching pods (randomly in iptables mode, round-robin in IPVS mode). As a result, roughly 33% of the requests land on the "risky" pod, while about 67% land on one of the "safe" pods. This setup gives you a canary, but it is a poor traffic-management tool: to change the percentage of requests going to the "risky" pod, you have to change the number of pods. For instance, to send 10% of requests to the "risky" pod, you need to run nine "safe" pods. This approach is wasteful, and a canary for only 1% of requests would require 99 "safe" pods.
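The replica arithmetic can be sketched in Python. This assumes an idealized, perfectly even spread of requests across pods and a single risky pod by default; the function names are illustrative only.

```python
import math

def canary_share(safe_pods: int, risky_pods: int) -> float:
    """Expected fraction of requests that reach the risky pods, assuming the
    Service spreads requests evenly across all matching pods."""
    return risky_pods / (safe_pods + risky_pods)

def safe_pods_needed(canary_percent: float, risky_pods: int = 1) -> int:
    """Smallest number of safe replicas that keeps the risky share at or
    below canary_percent percent."""
    if not 0 < canary_percent < 100:
        raise ValueError("canary_percent must be between 0 and 100")
    return math.ceil(risky_pods * 100 / canary_percent - risky_pods)

print(f"{canary_share(2, 1):.0%}")  # 33%
print(safe_pods_needed(10))         # 9
print(safe_pods_needed(1))          # 99
```

The 1% case is what makes replica-based canaries impractical: ninety-nine safe replicas just to steer one request in a hundred to the new version.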

Canary release with Istio:

Both staff-service pods (the safe version, which returns a placeholder image, and the risky version) are running with Envoy proxies injected into them:

kubectl get pod
NAME READY STATUS RESTARTS AGE
api-gateway-6f87d9477f-t5b7v 2/2 Running 0 72s
position-simulator-f6896665b-6kcdd 2/2 Running 0 72s
position-tracker-5b767bb48b-7x27z 2/2 Running 0 72s
staff-service-5f89dc68c-8vkgj 2/2 Running 0 71s
staff-service-risky-version-7775b88f66-w4ktf 2/2 Running 0 71s
vehicle-telemetry-77dfb44c6d-k2j9s 2/2 Running 0 72s
webapp-6bb5c7b569-8rv5g 2/2 Running 0 72s

---

kubectl get pod --show-labels | grep version
staff-service-5f89dc68c-8vkgj 2/2 Running 0 3m23s version=safe
staff-service-risky-version-7775b88f66-w4ktf 2/2 Running 0 3m23s version=risky

Before adding any Istio rules, let's request the vehicle driver's picture in a loop to confirm that, with no routing rules applied yet, requests alternate round-robin between the two versions:

while true; do curl http://34.141.89.122/api/vehicles/driver/City%20Truck; echo; sleep 0.5; done
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}

Next, we add a VirtualService and a DestinationRule that send 90% of the traffic to the safe version and 10% to the risky version:

Istio rules

kind: VirtualService
apiVersion: networking.istio.io/v1alpha3
metadata:
  name: a-set-of-routing-rules-we-can-call-this-anything # "just" a name for this virtualservice
  namespace: default
spec:
  hosts:
    - fleetman-staff-service.default.svc.cluster.local # The Service DNS (ie the regular K8S Service) name that we're applying routing rules to.
  http:
    - route:
        - destination:
            host: fleetman-staff-service.default.svc.cluster.local # The Target DNS name
            subset: safe-group # The name defined in the DestinationRule
          weight: 90
        - destination:
            host: fleetman-staff-service.default.svc.cluster.local # The Target DNS name
            subset: risky-group # The name defined in the DestinationRule
          weight: 10
---
kind: DestinationRule # Defining which pods should be part of each subset
apiVersion: networking.istio.io/v1alpha3
metadata:
  name: grouping-rules-for-our-photograph-canary-release # This can be anything you like.
  namespace: default
spec:
  host: fleetman-staff-service # Service
  subsets:
    - labels: # SELECTOR.
        version: safe # find pods with label "safe"
      name: safe-group
    - labels:
        version: risky
      name: risky-group

After applying the rules, we can check the result:

while true; do curl http://34.141.89.122/api/vehicles/driver/City%20Truck; echo; sleep 0.5; done
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}

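This test shows that Istio handles canary releases far more elegantly than native Kubernetes Services: the traffic split is controlled by weights in the VirtualService rather than by the number of pod replicas. Roughly 1 in 10 responses (here, 1 of 13) now returns the risky version's image.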
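Conceptually, the sidecar makes an independent weighted choice between the two subsets for every request. The Python below simulates that choice with `random.choices`; it illustrates the idea and is not Envoy's implementation (the seed and request count are arbitrary). Because each request is decided independently, the same client can flip between versions, which is the problem the next section addresses.

```python
import random
from collections import Counter

def pick_subset(weights: dict[str, int], rng: random.Random) -> str:
    """Pick a subset name with probability proportional to its weight,
    mimicking a VirtualService route with weighted destinations."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]

def simulate(weights: dict[str, int], requests: int, seed: int = 42) -> Counter:
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    return Counter(pick_subset(weights, rng) for _ in range(requests))

counts = simulate({"safe-group": 90, "risky-group": 10}, requests=10_000)
for subset, n in counts.most_common():
    print(f"{subset}: {n / 10_000:.1%}")
```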

Session Affinity / Stickiness:

Stickiness (session affinity) means that, while roughly 90% of clients get one version of the application and 10% get the other, each client keeps getting the same version on every subsequent request. Istio offers several load-balancing options, configured in a DestinationRule's trafficPolicy. Can we combine them with weighted routing to make a single user "stick" to the canary? Not out of the box, so there are two options: use an EnvoyFilter (https://github.com/istio/istio/issues/37910#issuecomment-1067314543), or drop the weights and use consistent-hash load balancing (LoadBalancerSettings.ConsistentHashLB), as in the configuration below:

kind: VirtualService
apiVersion: networking.istio.io/v1alpha3
metadata:
  name: a-set-of-routing-rules-we-can-call-this-anything # "just" a name for this virtualservice
  namespace: default
spec:
  hosts:
    - fleetman-staff-service.default.svc.cluster.local # The Service DNS (ie the regular K8S Service) name that we're applying routing rules to.
  http:
    - route:
        - destination:
            host: fleetman-staff-service.default.svc.cluster.local # The Target DNS name
            subset: all-staff-service-pods # The name defined in the DestinationRule
          # weight: 100 not needed if there's only one.
---
kind: DestinationRule # Defining which pods should be part of each subset
apiVersion: networking.istio.io/v1alpha3
metadata:
  name: grouping-rules-for-our-photograph-canary-release # This can be anything you like.
  namespace: default
spec:
  host: fleetman-staff-service # Service
  trafficPolicy:
    loadBalancer:
      consistentHash:
        httpHeaderName: "x-myval"
  subsets:
    - labels: # SELECTOR.
        app: staff-service # find all pods with label "app: staff-service"
      name: all-staff-service-pods

We could also hash on the client's source IP (useSourceIp), but a custom HTTP header (httpHeaderName) makes the concept much easier to demonstrate with curl.
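Why does a fixed header value keep landing on the same pod? Consistent hashing maps each key to a position on a hash ring and routes it to the next pod on the ring. The Python sketch below is a simplified ring hash with hypothetical pod names, SHA-256 as the hash function and 100 virtual nodes per pod; Envoy's own hash functions and ring sizes differ, so it will not predict which pod the value 193 lands on in the cluster.

```python
import bisect
import hashlib

def _hash(value: str) -> int:
    # Stable 64-bit hash (Python's built-in hash() is randomized per process).
    return int.from_bytes(hashlib.sha256(value.encode()).digest()[:8], "big")

class HashRing:
    """Simplified ring hash: each pod is placed on the ring several times
    (virtual nodes); a key goes to the first pod clockwise from its hash."""

    def __init__(self, pods: list[str], vnodes: int = 100):
        self._ring = sorted((_hash(f"{pod}#{i}"), pod)
                            for pod in pods for i in range(vnodes))
        self._points = [point for point, _ in self._ring]

    def pick(self, key: str) -> str:
        # First ring point strictly after the key's hash, wrapping around.
        idx = bisect.bisect(self._points, _hash(key)) % len(self._ring)
        return self._ring[idx][1]

ring = HashRing(["staff-service-safe", "staff-service-risky"])
for header_value in ["193", "293", "193"]:
    print(header_value, "->", ring.pick(header_value))
```

A useful property of this scheme is that removing a pod only remaps the keys that were assigned to it; every other key keeps its pod.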

# without header
while true; do curl http://34.141.89.122/api/vehicles/driver/City%20Truck; echo; sleep 0.5; done
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}

# with header 193 added
while true; do curl --header "x-myval: 193" http://34.141.89.122/api/vehicles/driver/City%20Truck; echo; sleep 0.5; done
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}

# different header - 293
while true; do curl --header "x-myval: 293" http://34.141.89.122/api/vehicles/driver/City%20Truck; echo; sleep 0.5; done
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}

The results show that session affinity works when the DestinationRule's trafficPolicy hashes on the "x-myval" header (with no weighting): the value 193 always lands on one pod and 293 on the other. But what happens if we change the header name in the DestinationRule to "myval" instead of "x-myval"? Let's check:

destinationrule.networking.istio.io/grouping-rules-for-our-photograph-canary-release configured

---

while true; do curl --header "myval: 293" http://34.141.89.122/api/vehicles/driver/City%20Truck; echo; sleep 0.5; done
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}

---

while true; do curl --header "myval: 193" http://34.141.89.122/api/vehicles/driver/City%20Truck; echo; sleep 0.5; done
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}

Why is it not working? That will be explained in the next section.

Why you need to "Propagate headers":

Istio, and service meshes in general, aim to be non-invasive: they should not require changes to your code. In the previous examples we only applied Kubernetes and Istio configuration and did not change anything in the fleetman application code. There is one exception, however: distributed tracing, which depends on the application propagating certain headers from each incoming request to its outgoing calls. Let's see what happens when it doesn't.

First, we deploy the application and run curl in a loop to generate some traces, then inspect them in Google Cloud Trace:

while true; do curl http://34.107.0.228/api/vehicles/driver/City%20Truck; echo; sleep 0.5; done

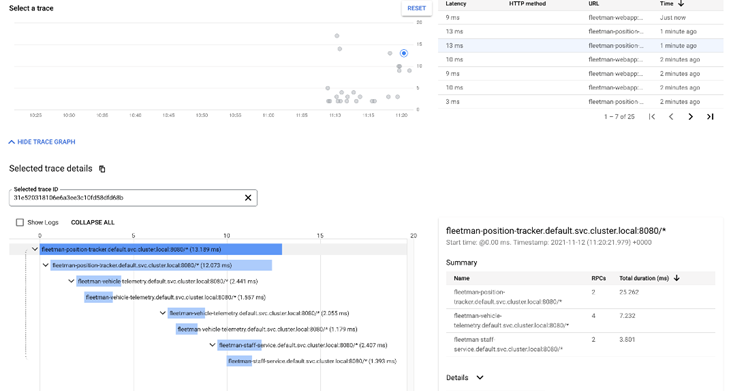

When we select a trace, we can see at least 8 spans showing how long each step of the communication between the microservices takes: position-tracker → vehicle-telemetry → staff-service.

Now we remove the header-propagation code (a Feign client interceptor) so that our Spring Boot applications no longer propagate headers. After deploying the new images to the Deployments, we can see that without automatic header propagation, the tracing is much less useful than before.

We now get only 2 spans: the trace still contains some information, but it is heavily truncated. This also explains the stickiness problem from the previous section, where consistent hashing worked with the "x-myval" header but not with "myval". Line 40 of the interceptor code explains it: evidently only certain headers are propagated, and "myval" is not one of them, so it never reaches the load-balanced call to staff-service. Without header propagation, stickiness via consistent hashing on headers cannot work.
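The job of the removed interceptor can be sketched as copying selected headers from each incoming request onto the outgoing calls. We don't have the interceptor's code here, so the allowlist below is hypothetical: a subset of the trace headers listed in Istio's distributed tracing documentation, plus the two custom headers used in this article. With a list like this, "myval" would be dropped, which would explain the failed stickiness test.

```python
# Hypothetical allowlist: B3 / W3C trace-context headers that Istio asks
# applications to forward, plus the custom headers used in this article.
PROPAGATED_HEADERS = {
    "x-request-id",
    "x-b3-traceid",
    "x-b3-spanid",
    "x-b3-parentspanid",
    "x-b3-sampled",
    "x-b3-flags",
    "b3",
    "traceparent",
    "tracestate",
    "x-myval",      # consistent-hash key from the stickiness example
    "x-my-header",  # routing header from the dark-release example
}

def outgoing_headers(incoming: dict[str, str]) -> dict[str, str]:
    """Copy the allowlisted headers (case-insensitively) from an incoming
    request so they can be attached to the outgoing call."""
    return {name.lower(): value for name, value in incoming.items()
            if name.lower() in PROPAGATED_HEADERS}

incoming = {"X-B3-TraceId": "80f198ee56343ba8", "x-myval": "193",
            "myval": "293", "Accept": "*/*"}
print(outgoing_headers(incoming))
# {'x-b3-traceid': '80f198ee56343ba8', 'x-myval': '193'}
```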

Dark Releases

It's recommended to keep your environments (DEV/QA/UAT/PROD) as similar as possible. A common best practice is therefore to have a staging cluster where potentially risky new versions of your microservices are deployed and thoroughly tested. The main disadvantage is that you need a complete duplicate of your entire architecture, effectively doubling your running costs.

A dark release is an alternative: the new version runs in the live production environment, but only specific users can access it. Many companies avoid experimental deployments in production, so this approach won't suit everyone, but where reducing cloud costs is a priority, dark releases can be a useful option.

We start by deploying two webapp versions, original and experimental:

Webapp yaml

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: webapp
spec:
  selector:
    matchLabels:
      app: webapp
  replicas: 1
  template: # template for the pods
    metadata:
      labels:
        app: webapp
        version: original
    spec:
      containers:
        - name: webapp
          image: richardchesterwood/istio-fleetman-webapp-angular:6
          env:
            - name: SPRING_PROFILES_ACTIVE
              value: production-microservice
          imagePullPolicy: Always
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: webapp-experimental
spec:
  selector:
    matchLabels:
      app: webapp
  replicas: 1
  template: # template for the pods
    metadata:
      labels:
        app: webapp
        version: experimental
    spec:
      containers:
        - name: webapp
          image: richardchesterwood/istio-fleetman-webapp-angular:6-experimental
          env:
            - name: SPRING_PROFILES_ACTIVE
              value: production-microservice
          imagePullPolicy: Always

deployments

---

webapp-5d9d655b68-rwwq6 2/2 Running 0 35m version=original
webapp-experimental-857f4b4b6b-t7ttk 2/2 Running 0 35m version=experimental
---
staff-service-5f89dc68c-vqcwq 2/2 Running 0 35m version=safe
staff-service-risky-version-7775b88f66-xltrw 2/2 Running 0 35m version=risky

So we have two webapp versions and two staff-service versions running in our production environment. Regular production traffic should keep going to webapp version=original and staff-service version=safe, as it does now, while the version=experimental and version=risky pods should be reachable only by developers. To achieve this, we add a header match:

apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: ingress-gateway-configuration
spec:
  selector:
    istio: ingressgateway # use Istio default gateway implementation
  servers:
    - port:
        number: 80
        name: http
        protocol: HTTP
      hosts:
        - "*" # Domain name of the external website
---
kind: VirtualService
apiVersion: networking.istio.io/v1alpha3
metadata:
  name: fleetman-webapp
  namespace: default
spec:
  hosts: # which incoming host are we applying the proxy rules to???
    - "*"
  gateways:
    - ingress-gateway-configuration
  http:
    - match:
        - headers: # IF
            x-my-header:
              exact: canary
      route: # THEN
        - destination:
            host: fleetman-webapp
            subset: experimental
    - route: # CATCH ALL
        - destination:
            host: fleetman-webapp
            subset: original
---
kind: DestinationRule
apiVersion: networking.istio.io/v1alpha3
metadata:
  name: fleetman-webapp
  namespace: default
spec:
  host: fleetman-webapp
  subsets:
    - labels:
        version: original
      name: original
    - labels:
        version: experimental
      name: experimental
---
kind: VirtualService
apiVersion: networking.istio.io/v1alpha3
metadata:
  name: fleetman-staff-service
  namespace: default
spec:
  hosts:
    - fleetman-staff-service
  http:
    - match:
        - headers: # IF
            x-my-header:
              exact: canary
      route: # THEN
        - destination:
            host: fleetman-staff-service
            subset: risky
    - route: # CATCH ALL
        - destination:
            host: fleetman-staff-service
            subset: safe
---
kind: DestinationRule
apiVersion: networking.istio.io/v1alpha3
metadata:
  name: fleetman-staff-service
  namespace: default
spec:
  host: fleetman-staff-service
  subsets:
    - labels:
        version: safe
      name: safe
    - labels:
        version: risky
      name: risky

It's as simple as that: if the x-my-header header equals canary, the request goes to the experimental webapp and the risky staff-service. Otherwise, the catch-all route sends it to the original webapp and the safe staff-service. Let's test it:

# original & safe
curl -s http://34.141.55.46/vehicle/City%20Truck | grep title
<title>Fleet Management</title>
curl -s http://34.141.55.46/api/vehicles/driver/City%20Truck
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}

# if we add header, experimental & risky
curl --header "x-my-header: canary" -s http://34.141.55.46/vehicle/City%20Truck | grep title
<title>Fleet Management Istio Premium Enterprise Edition</title>
curl --header "x-my-header: canary" -s http://34.141.55.46/api/vehicles/driver/City%20Truck
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}

P.S. If you want to test this in a browser, you can use a browser extension that modifies request headers, such as ModHeader.

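P.S. 2: Header propagation in our code is essential here. The webapp routing would still work without it, because the ingress gateway sees the header directly, but the header would never reach the call to staff-service, so the risky version would never be selected.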
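The IF / THEN / CATCH ALL structure works because the routes in a VirtualService's http list are evaluated in order and the first matching route wins. Here is a minimal Python model of that evaluation. It supports only a single exact header match per route, whereas real VirtualService matches also support prefix and regex matching on headers, URIs and more; the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Route:
    subset: str
    header: Optional[str] = None  # lower-case header name to match; None = catch-all
    exact: Optional[str] = None   # required exact header value

def select_subset(routes: list[Route], headers: dict[str, str]) -> str:
    """Return the subset of the first route whose match succeeds.
    Routes are checked in order, like the http list of a VirtualService."""
    normalized = {k.lower(): v for k, v in headers.items()}  # header names are case-insensitive
    for route in routes:
        if route.header is None or normalized.get(route.header) == route.exact:
            return route.subset
    raise LookupError("no route matched")

staff_service_routes = [
    Route(subset="risky", header="x-my-header", exact="canary"),  # IF ... THEN
    Route(subset="safe"),                                         # CATCH ALL
]

print(select_subset(staff_service_routes, {"x-my-header": "canary"}))  # risky
print(select_subset(staff_service_routes, {}))                         # safe
```

Putting the catch-all route first would shadow the header match entirely, which is why it must stay last.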

Fault Injection

When building a distributed architecture, you have to accept that 100% reliability is impossible. What matters is fault tolerance: even if some services fail, the application as a whole should keep working. This section shows how you can deliberately introduce faults into your system with Istio, for instance making a service respond more slowly by adding a delay to its requests.

kind: VirtualService
apiVersion: networking.istio.io/v1alpha3
metadata:
  name: fleetman-staff-service
  namespace: default
spec:
  hosts:
    - fleetman-staff-service
  http:
    - match:
        - headers:
            x-my-header:
              exact: canary
      fault:
        delay:
          percentage:
            value: 100.0
          fixedDelay: 10s
      route:
        - destination:
            host: fleetman-staff-service
            subset: risky
    - route:
        - destination:
            host: fleetman-staff-service
            subset: safe

In this first example, we add a 10-second delay to requests that carry the canary header from the previous section, so the fault injection only affects our developers' testing traffic.

Testing delay

time curl --header "x-my-header: canary" -s http://34.141.55.46/api/vehicles/driver/City%20Truck
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
curl --header "x-my-header: canary" -s 0.01s user 0.01s system 0% cpu 10.108 total # it takes 10 seconds for image to appear

In the browser, the driver image has disappeared. So this delay has clearly uncovered a problem for the developers to fix.
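Conceptually, the fault filter in the sidecar wraps each matching request: with the configured percentage it waits before forwarding the request, and it can also reply with an error status without contacting the upstream service at all (the abort fault used in the next example). The Python sketch below models that behavior. It is an illustration, not Envoy's implementation; the names are hypothetical, and it uses a 0.2-second delay instead of 10 seconds so it runs quickly.

```python
import random
import time
from typing import Callable, Optional, Tuple

Response = Tuple[int, str]  # (HTTP status, body)

def inject_faults(call: Callable[[], Response],
                  delay_s: float = 0.0, delay_pct: float = 0.0,
                  abort_status: Optional[int] = None, abort_pct: float = 0.0,
                  rng: Optional[random.Random] = None) -> Response:
    """Wrap an upstream call the way a fault-injecting proxy would:
    optionally sleep first, then optionally fail without calling upstream."""
    rng = rng or random.Random()
    if delay_pct and rng.uniform(0, 100) < delay_pct:
        time.sleep(delay_s)                      # like fault.delay.fixedDelay
    if abort_status is not None and rng.uniform(0, 100) < abort_pct:
        return abort_status, "injected abort"    # like fault.abort.httpStatus
    return call()

def staff_service() -> Response:
    return 200, '{"name":"Driver Name"}'

start = time.monotonic()
print(inject_faults(staff_service, delay_s=0.2, delay_pct=100.0))
print(f"took {time.monotonic() - start:.1f}s")
print(inject_faults(staff_service, abort_status=503, abort_pct=100.0))
```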

In the second example, we inject an HTTP abort fault into one of the services to test the resilience of the microservices that depend on it:

kind: VirtualService
apiVersion: networking.istio.io/v1alpha3
metadata:
  name: fleetman-vehicle-telemetry
  namespace: default
spec:
  hosts:
    - fleetman-vehicle-telemetry
  http:
    - fault:
        abort:
          httpStatus: 503
          percentage:
            value: 100
      route:
        - destination:
            host: fleetman-vehicle-telemetry

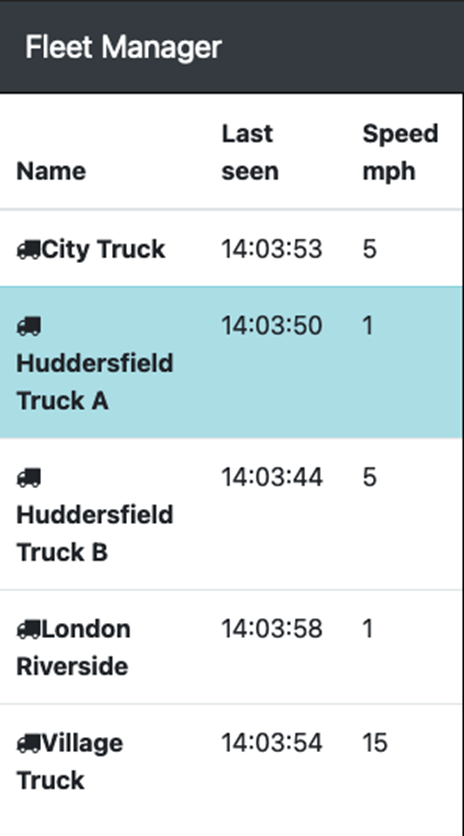

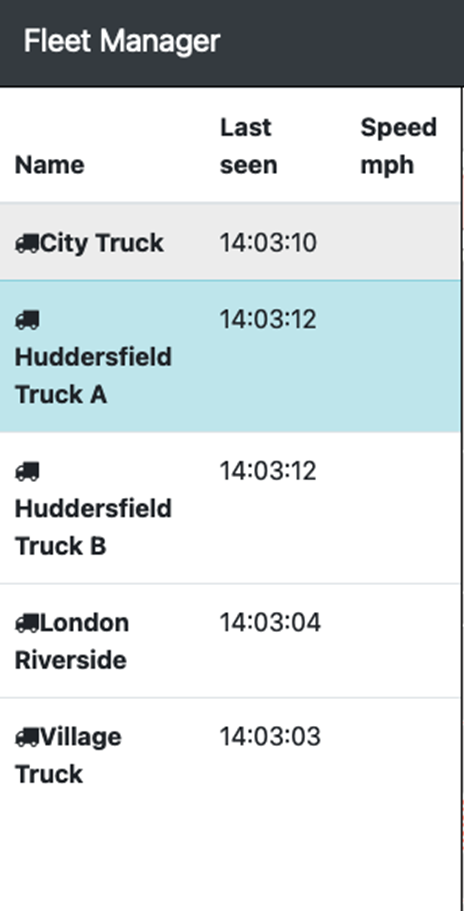

Once we applied this manifest, the speeds disappeared for all of the vehicles, we're not getting the speed readouts -

Before:

After:

Testing the abort

kubectl logs position-tracker-5b767bb48b-skrqn
2021-11-16 14:04:50.742 INFO 1 --- [nio-8080-exec-2] c.v.t.e.ExternalVehicleTelemetryService : Telemetry service unavailable. Cannot get speed for vehicle Huddersfield Truck B
2021-11-16 14:04:50.744 INFO 1 --- [nio-8080-exec-2] c.v.t.e.ExternalVehicleTelemetryService : Telemetry service unavailable. Unable to update data for VehiclePosition [name=Huddersfield Truck B, lat=53.6228140, lng=-1.7984330, timestamp=Tue Nov 16 14:04:50 UTC 2021, speed=null
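The logs suggest that position-tracker degrades gracefully: it logs the failure and carries on with speed=null instead of failing the whole update. We haven't seen the fleetman source for this, so the Python below is only a sketch of the pattern, with hypothetical class and function names.

```python
from dataclasses import dataclass
from typing import Callable, Optional

class TelemetryUnavailable(Exception):
    """Raised by a (hypothetical) telemetry client on a 5xx response."""

@dataclass
class VehiclePosition:
    name: str
    lat: float
    lng: float
    speed: Optional[float] = None

def update_position(position: VehiclePosition,
                    get_speed: Callable[[str], float]) -> VehiclePosition:
    """Enrich a position with its speed, degrading gracefully when the
    telemetry service fails instead of failing the whole update."""
    try:
        position.speed = get_speed(position.name)
    except TelemetryUnavailable:
        print(f"Telemetry service unavailable. Cannot get speed for vehicle {position.name}")
        position.speed = None  # keep the position update, just without a speed
    return position

def aborted_telemetry(name: str) -> float:
    # What the injected 503 abort looks like from the caller's side.
    raise TelemetryUnavailable("503")

print(update_position(VehiclePosition("Huddersfield Truck B", 53.6228140, -1.7984330),
                      aborted_telemetry))
```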

Circuit Breaking

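Circuit breaking is an important pattern for creating resilient microservice applications. It lets you write applications that limit the impact of failures, latency spikes, and other undesirable effects of network peculiarities. In Istio, circuit breakers are configured in a DestinationRule's trafficPolicy, through connectionPool limits and outlierDetection; this example uses outlier detection.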

NAME READY STATUS RESTARTS AGE
api-gateway-6f87d9477f-czrlq 2/2 Running 0 65s
position-simulator-f6896665b-wftfb 2/2 Running 0 65s
position-tracker-5b767bb48b-bt8l5 2/2 Running 0 65s
staff-service-68f84fcfcf-wgw9v 2/2 Running 0 64s
staff-service-risky-version-7b45bb84c6-fvfml 2/2 Running 0 63s
vehicle-telemetry-77dfb44c6d-lff89 2/2 Running 0 64s
webapp-5d9d655b68-xb85b 2/2 Running 0 65s

We have two versions of the staff service, and the "risky" one is broken (a bad image). We add the following Istio configuration:

apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: ingress-gateway-configuration
spec:
  selector:
    istio: ingressgateway # use Istio default gateway implementation
  servers:
    - port:
        number: 80
        name: http
        protocol: HTTP
      hosts:
        - "*" # Domain name of the external website
---
# All traffic routed to the fleetman-webapp service
# No DestinationRule needed as we aren't doing any subsets, load balancing or outlier detection.
kind: VirtualService
apiVersion: networking.istio.io/v1alpha3
metadata:
  name: fleetman-webapp
  namespace: default
spec:
  hosts: # which incoming host are we applying the proxy rules to???
    - "*"
  gateways:
    - ingress-gateway-configuration
  http:
    - route:
        - destination:
            host: fleetman-webapp

First, let's confirm that one of the services is unhealthy and could cause cascading failures across our infrastructure:

while true; do curl -s -o /dev/null --noproxy "*" -w 'NL=%{time_namelookup} C=%{time_connect} AC=%{time_appconnect} PT=%{time_pretransfer} ST=%{time_starttransfer} T=%{time_total} Code=%{http_code}\n' http://34.141.55.46/api/vehicles/driver/City%20Truck; echo; sleep 0.5; done

NL=0.009269 C=0.033977 AC=0.000000 PT=0.034015 ST=0.093005 T=0.093246 Code=200

NL=0.007633 C=0.033045 AC=0.000000 PT=0.033080 ST=1.485889 T=1.486167 Code=200

NL=0.007831 C=0.032791 AC=0.000000 PT=0.032824 ST=0.070498 T=0.070823 Code=200

NL=0.007803 C=0.034128 AC=0.000000 PT=0.034162 ST=4.254433 T=4.254738 Code=200

NL=0.008076 C=0.034095 AC=0.000000 PT=0.034133 ST=0.072507 T=0.072789 Code=200

NL=0.007928 C=0.033723 AC=0.000000 PT=0.033759 ST=3.871482 T=3.871770 Code=500

NL=0.008065 C=0.034657 AC=0.000000 PT=0.034699 ST=0.071891 T=0.072172 Code=200

NL=0.008004 C=0.033517 AC=0.000000 PT=0.033554 ST=3.742410 T=3.742832 Code=500

NL=0.007158 C=0.069582 AC=0.000000 PT=0.069623 ST=4.004408 T=4.004700 Code=500

NL=0.007963 C=0.032543 AC=0.000000 PT=0.032580 ST=0.264422 T=0.264698 Code=500

NL=0.007906 C=0.033503 AC=0.000000 PT=0.033537 ST=0.070977 T=0.071208 Code=200

NL=0.008003 C=0.032293 AC=0.000000 PT=0.032331 ST=2.140184 T=2.140479 Code=500

NL=0.008022 C=0.031373 AC=0.000000 PT=0.031416 ST=0.068085 T=0.068359 Code=200

NL=0.007724 C=0.033285 AC=0.000000 PT=0.033320 ST=2.136710 T=2.136999 Code=200

NL=0.007804 C=0.032801 AC=0.000000 PT=0.032834 ST=0.069937 T=0.070214 Code=200

NL=0.007770 C=0.033711 AC=0.000000 PT=0.033745 ST=1.102414 T=1.102702 Code=500

NL=0.007991 C=0.033430 AC=0.000000 PT=0.033466 ST=4.116621 T=4.127109 Code=500

NL=0.007881 C=0.135206 AC=0.000000 PT=0.135283 ST=0.403586 T=0.403874 Code=500

NL=0.007916 C=0.032196 AC=0.000000 PT=0.032232 ST=0.066900 T=0.067106 Code=200

NL=0.007763 C=0.032716 AC=0.000000 PT=0.032754 ST=2.150330 T=2.151754 Code=500

NL=0.007878 C=0.032497 AC=0.000000 PT=0.032530 ST=0.068819 T=0.069106 Code=200
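For longer runs, it helps to summarize output like this rather than read it line by line. Below is a small Python helper; it assumes the exact -w format used above, and both the function and its 1-second threshold for a "slow" response are our own choices.

```python
import re

# Matches the total time (T=) and status code (Code=) fields of the -w format.
LINE = re.compile(r"T=(?P<total>[\d.]+) Code=(?P<code>\d{3})")

def summarize(lines: list[str], slow_threshold_s: float = 1.0) -> dict[str, int]:
    """Count requests, 5xx responses and slow responses in curl -w output."""
    summary = {"requests": 0, "errors_5xx": 0, "slow": 0}
    for line in lines:
        match = LINE.search(line)
        if not match:
            continue  # skip the blank lines printed by echo
        summary["requests"] += 1
        if match["code"].startswith("5"):
            summary["errors_5xx"] += 1
        if float(match["total"]) > slow_threshold_s:
            summary["slow"] += 1
    return summary

sample = [
    "NL=0.009269 C=0.033977 AC=0.000000 PT=0.034015 ST=0.093005 T=0.093246 Code=200",
    "",
    "NL=0.007928 C=0.033723 AC=0.000000 PT=0.033759 ST=3.871482 T=3.871770 Code=500",
]
print(summarize(sample))  # {'requests': 2, 'errors_5xx': 1, 'slow': 1}
```

For example, redirect the loop's output to a file and pass its lines to summarize().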

We get multiple 500 responses, and some requests take more than 4 seconds. Let's configure a circuit breaker and see what we get after applying it:

apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: circuit-breaker-for-the-entire-default-namespace
spec:
  host: "fleetman-staff-service.default.svc.cluster.local" # This is the name of the k8s service that we're configuring
  trafficPolicy:
    outlierDetection: # Circuit Breakers HAVE TO BE SWITCHED ON
      maxEjectionPercent: 100
      consecutiveErrors: 2 # 502, 503 and 504 responses count as errors; newer Istio releases deprecate this field in favour of consecutiveGatewayErrors / consecutive5xxErrors
      interval: 10s
      baseEjectionTime: 30s

while true; do curl http://34.141.55.46/api/vehicles/driver/City%20Truck; echo; sleep 0.5; done
{"timestamp":"2021-11-16T16:00:25.348+0000","status":500,"error":"Internal Server Error","message":"status 502 reading RemoteStaffMicroserviceCalls#getDriverFor(String)","path":"//vehicles/driver/City%20Truck"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"timestamp":"2021-11-16T16:00:27.554+0000","status":500,"error":"Internal Server Error","message":"status 502 reading RemoteStaffMicroserviceCalls#getDriverFor(String)","path":"//vehicles/driver/City%20Truck"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"timestamp":"2021-11-16T16:00:36.701+0000","status":500,"error":"Internal Server Error","message":"status 502 reading RemoteStaffMicroserviceCalls#getDriverFor(String)","path":"//vehicles/driver/City%20Truck"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"timestamp":"2021-11-16T16:00:44.191+0000","status":500,"error":"Internal Server Error","message":"status 502 reading RemoteStaffMicroserviceCalls#getDriverFor(String)","path":"//vehicles/driver/City%20Truck"}
{"timestamp":"2021-11-16T16:00:47.670+0000","status":500,"error":"Internal Server Error","message":"status 502 reading RemoteStaffMicroserviceCalls#getDriverFor(String)","path":"//vehicles/driver/City%20Truck"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"timestamp":"2021-11-16T16:01:02.824+0000","status":500,"error":"Internal Server Error","message":"status 502 reading RemoteStaffMicroserviceCalls#getDriverFor(String)","path":"//vehicles/driver/City%20Truck"}
{"timestamp":"2021-11-16T16:01:04.934+0000","status":500,"error":"Internal Server Error","message":"status 502 reading RemoteStaffMicroserviceCalls#getDriverFor(String)","path":"//vehicles/driver/City%20Truck"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
#####
...70s of continuous 1.jpg requests
####
{"timestamp":"2021-11-16T16:02:32.839+0000","status":500,"error":"Internal Server Error","message":"status 502 reading RemoteStaffMicroserviceCalls#getDriverFor(String)","path":"//vehicles/driver/City%20Truck"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"timestamp":"2021-11-16T16:02:36.017+0000","status":500,"error":"Internal Server Error","message":"status 502 reading RemoteStaffMicroserviceCalls#getDriverFor(String)","path":"//vehicles/driver/City%20Truck"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/placeholder.png"}
{"name":"Driver Name","photo":"https://istio.s3.amazonaws.com/1.jpg"}

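The test results show that after a couple of consecutive 502 failures (consecutiveErrors: 2), Istio ejected the faulty pod from the load-balancing pool, and for about 70 seconds in this run every request was served by the healthy pod. Note that the DestinationRule sets baseEjectionTime to 30s, not 70s: a host stays ejected for baseEjectionTime multiplied by the number of times it has been ejected, so a pod that keeps failing is kept out for progressively longer periods.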
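The ejection rule can be modeled in a few lines of Python. This is a deliberately simplified sketch, not Envoy's implementation: it counts consecutive errors per host and ejects for baseEjectionTime multiplied by the number of ejections, but it ignores the interval sweep, maxEjectionPercent and any decay of the ejection count over time. The class names are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Host:
    name: str
    consecutive_errors: int = 0
    times_ejected: int = 0
    ejected_until: float = 0.0  # timestamp (seconds) when the host may return

class OutlierDetector:
    """Simplified consecutive-error outlier detection: eject a host after
    max_errors consecutive failures, for base_ejection_s * times ejected."""

    def __init__(self, max_errors: int = 2, base_ejection_s: float = 30.0):
        self.max_errors = max_errors
        self.base_ejection_s = base_ejection_s

    def record(self, host: Host, ok: bool, now: float) -> None:
        if ok:
            host.consecutive_errors = 0  # any success breaks the streak
            return
        host.consecutive_errors += 1
        if host.consecutive_errors >= self.max_errors:
            host.times_ejected += 1
            host.ejected_until = now + self.base_ejection_s * host.times_ejected
            host.consecutive_errors = 0

    @staticmethod
    def available(host: Host, now: float) -> bool:
        return now >= host.ejected_until

detector = OutlierDetector(max_errors=2, base_ejection_s=30.0)
risky = Host("staff-service-risky")
detector.record(risky, ok=False, now=0.0)
detector.record(risky, ok=False, now=1.0)   # 2nd consecutive error: ejected for 30s
print(risky.ejected_until)                  # 31.0
detector.record(risky, ok=False, now=40.0)
detector.record(risky, ok=False, now=41.0)  # ejected again, this time for 60s
print(risky.ejected_until)                  # 101.0
```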